We are investigating how SX-Aurora performs on various ML applications. In this post, we would like to share the results of a performance evaluation of three ML workloads using TensorFlow.

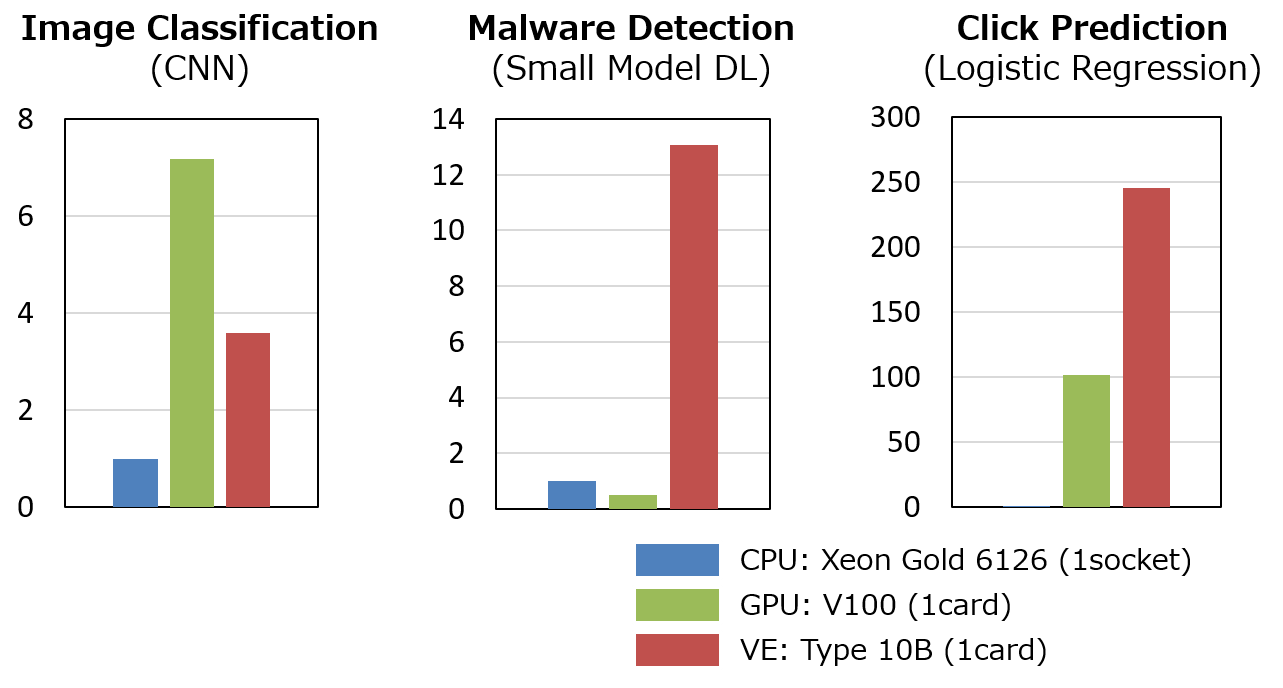

The graphs show the relative training performance on CPU, GPU, and the Vector Engine (VE) of SX-Aurora.

The left graph shows a simple CNN for image classification based on the Keras example, trained on the MNIST dataset. As expected, the GPU's high peak computational performance suits convolution layers well, so the V100 performs best.
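To make the compute-bound argument concrete, here is a minimal back-of-envelope sketch of the arithmetic intensity (FLOPs per byte of memory traffic) of one convolution layer. The layer shape (a 26×26×32 input and 64 filters of size 3×3) is an assumption chosen to resemble the second convolution of a Keras-style MNIST CNN, and the traffic model optimistically assumes that each tensor moves through memory only once.

```python
def conv2d_intensity(h_in: int, w_in: int, c_in: int, c_out: int, k: int,
                     bytes_per_elem: int = 4) -> float:
    """FLOPs per byte of a valid, stride-1 2D convolution for one image.

    Assumption: minimal memory traffic, i.e. input, weights, and output
    each cross the memory bus exactly once (float32 by default).
    """
    h_out, w_out = h_in - k + 1, w_in - k + 1
    # One multiply-add (2 FLOPs) per filter weight per output element.
    flops = 2 * h_out * w_out * c_out * c_in * k * k
    bytes_moved = bytes_per_elem * (h_in * w_in * c_in        # input feature map
                                    + k * k * c_in * c_out    # filter weights
                                    + h_out * w_out * c_out)  # output feature map
    return flops / bytes_moved


# Hypothetical layer shape, similar in size to a small MNIST CNN.
ai = conv2d_intensity(26, 26, 32, 64, 3)
print(f"{ai:.1f} FLOPs per byte")
```

For this shape the function returns about 69 FLOPs per byte. At such a high intensity, throughput is typically limited by peak arithmetic rather than by memory bandwidth, which is where a GPU's high peak FLOPS pays off.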

The second graph is also a deep learning workload, but its model is “small”. As described here, small models with fewer layers and/or less computation are important, for example, for fast inference on mobile devices. However, training such models is still a heavy task because of the extensive search over hyperparameters, model architectures, and so on. In this evaluation, we used our internal network model for malware detection as an example of such a small model. For a small model, the overhead of offloading work to the device is not negligible. We are developing a technique to reduce this overhead on the VE and used it experimentally in this evaluation. As the graph shows, this technique improves VE performance drastically. The details will be presented at the SWoPP 2019 workshop in Japan.
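A toy cost model can illustrate why offload overhead matters more for small models. It assumes a fixed, hypothetical per-operation offload cost and a hypothetical device peak; the numbers are illustrative rather than measurements from this evaluation, and the sketch says nothing about how our overhead-reduction technique works.

```python
def overhead_fraction(flops_per_op: float, peak_flops: float,
                      offload_overhead_s: float) -> float:
    """Fraction of an offloaded operation's time spent on offload overhead.

    Toy model (assumption): time = fixed per-op overhead + flops / peak.
    The number of ops cancels out, so the fraction depends only on op size.
    """
    compute_s = flops_per_op / peak_flops
    return offload_overhead_s / (offload_overhead_s + compute_s)


# Hypothetical device: 1 TFLOP/s peak, 10 microseconds of overhead per offloaded op.
PEAK = 1e12
OVERHEAD = 10e-6

large_op = overhead_fraction(1e9, PEAK, OVERHEAD)  # e.g. a large convolution
small_op = overhead_fraction(1e5, PEAK, OVERHEAD)  # e.g. a tiny layer in a small model
print(f"large op: {large_op:.1%} overhead, small op: {small_op:.1%} overhead")
```

With these made-up numbers, overhead is about 1% of a large operation's time but about 99% of a small one's, so a model built from many small operations spends most of its time on offloading rather than computing.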

The last graph shows the performance of logistic regression (LR). We developed a simple LR model for click prediction and evaluated it with a dataset from Kaggle. The kernel of logistic regression is sparse matrix-vector multiplication, which is known to be memory-bandwidth bound. The VE has the world's highest memory bandwidth, 1.2 TB/s, and outperforms the other platforms.
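The kernel can be sketched in plain Python: a CSR sparse matrix-vector product feeding a sigmoid, plus a rough FLOPs-per-byte estimate. The CSR layout, float32 values, int32 indices, the toy data, and the no-cache-reuse traffic model are all assumptions for illustration, not details of our model.

```python
import math
from typing import List


def csr_spmv(indptr: List[int], indices: List[int], data: List[float],
             x: List[float]) -> List[float]:
    """Compute y = A @ x for a sparse matrix A stored in CSR format."""
    n_rows = len(indptr) - 1
    y = [0.0] * n_rows
    for i in range(n_rows):
        acc = 0.0
        # Each nonzero costs one multiply-add but needs a value, a column
        # index, and an indirect (gathered) read of x: little math per byte.
        for k in range(indptr[i], indptr[i + 1]):
            acc += data[k] * x[indices[k]]
        y[i] = acc
    return y


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(indptr, indices, data, weights, bias=0.0) -> List[float]:
    """Logistic regression click probability for each row (sample)."""
    return [sigmoid(z + bias) for z in csr_spmv(indptr, indices, data, weights)]


def spmv_intensity(nnz: int, n_rows: int, value_bytes: int = 4,
                   index_bytes: int = 4) -> float:
    """FLOPs per byte of CSR SpMV, assuming x is re-fetched for every nonzero."""
    flops = 2 * nnz
    bytes_moved = (nnz * (2 * value_bytes + index_bytes)  # value, column index, gathered x
                   + (n_rows + 1) * index_bytes           # row pointers
                   + n_rows * value_bytes)                # output y
    return flops / bytes_moved


# Toy data: three samples, each with two active one-hot features out of six.
indptr = [0, 2, 4, 6]
indices = [0, 3, 1, 4, 2, 5]
data = [1.0] * 6
weights = [0.5, -0.5, 0.0, 1.0, -1.0, 0.0]

print(csr_spmv(indptr, indices, data, weights))  # [1.5, -1.5, 0.0]
print([round(p, 3) for p in predict_proba(indptr, indices, data, weights)])  # [0.818, 0.182, 0.5]
print(f"{spmv_intensity(10**6, 10**4):.3f} FLOPs per byte")
```

Under this traffic model, each nonzero moves about 12 bytes for only 2 FLOPs, roughly 0.17 FLOPs per byte. At 1.2 TB/s that caps the kernel near 0.2 TFLOP/s regardless of peak compute, so throughput scales with memory bandwidth rather than arithmetic capability.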

We believe these results show the great potential of SX-Aurora for ML workloads other than compute-intensive CNNs.

P.S.

Our TensorFlow was mentioned in the presentation at ISC19!